DeepSeek releases a new AI image model

More topics: Mistral AI releases a latency-optimized model | Block unveils an open-source AI agent platform

Hi AI Enthusiasts,

Welcome to this week’s Magic AI News, where we present the most exciting AI news of the week. Today, we’re talking about DeepSeek’s new AI image model and Mistral’s new latency-optimized model for local use.

In addition, we look at Block’s new on-machine AI agent platform, which can automate engineering tasks seamlessly. And the best part: it’s open-source. We’re impressed! 😎

This week’s Magic AI tool is perfect for anyone looking for an AI solution to automate their content workflow. For example, this tool can turn your audio into content.

Let’s explore this week’s AI news together. 👇🏽

Top AI news of the week

🌉 DeepSeek releases a new AI image model

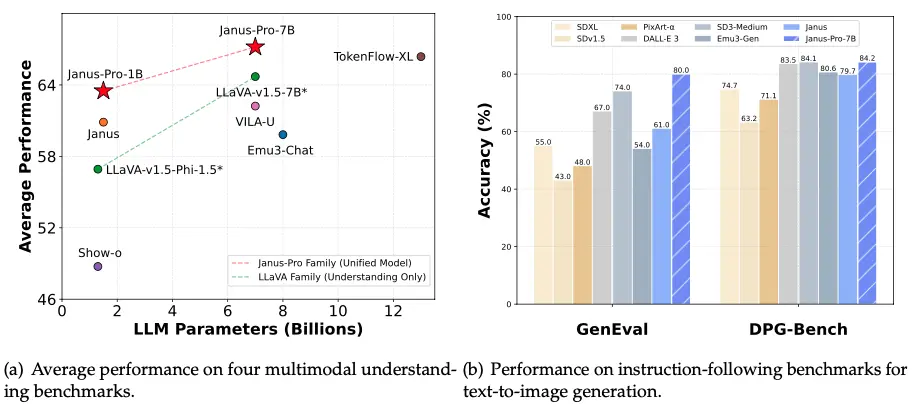

The Chinese AI startup DeepSeek has launched the Janus-Pro model family, a new set of open-source multimodal models. According to DeepSeek, the largest model can compete with image generators such as DALL-E 3 and Stable Diffusion. Just last week, DeepSeek had already shaken up the AI world and the stock markets with its R1 model.

The details:

- The new Janus-Pro models have 1.3B and 7B parameters and can generate high-quality images from text descriptions.

- According to DeepSeek, Janus-Pro outperforms DALL-E 3 and Stable Diffusion in key industry benchmarks such as GenEval and DPG-Bench (see image below).

- The code for the models is open-source under the MIT license, while use of the models themselves is subject to the DeepSeek Model License. The new models are also available on Hugging Face.

Our thoughts

Everyone in the AI community is currently talking about DeepSeek. According to DeepSeek, R1 was trained more efficiently than Llama 3 or OpenAI’s o1. That raises the question of whether training large language models really requires as much computing power as American tech companies claim.

At the same time, doubts about DeepSeek’s claims are growing. However, one thing is clear: large language models have become mass-market products, and anyone can use open-source models to build great applications.

More information: 🔗 DeepSeek | Heise Online

🚀 Mistral AI releases a latency-optimized model

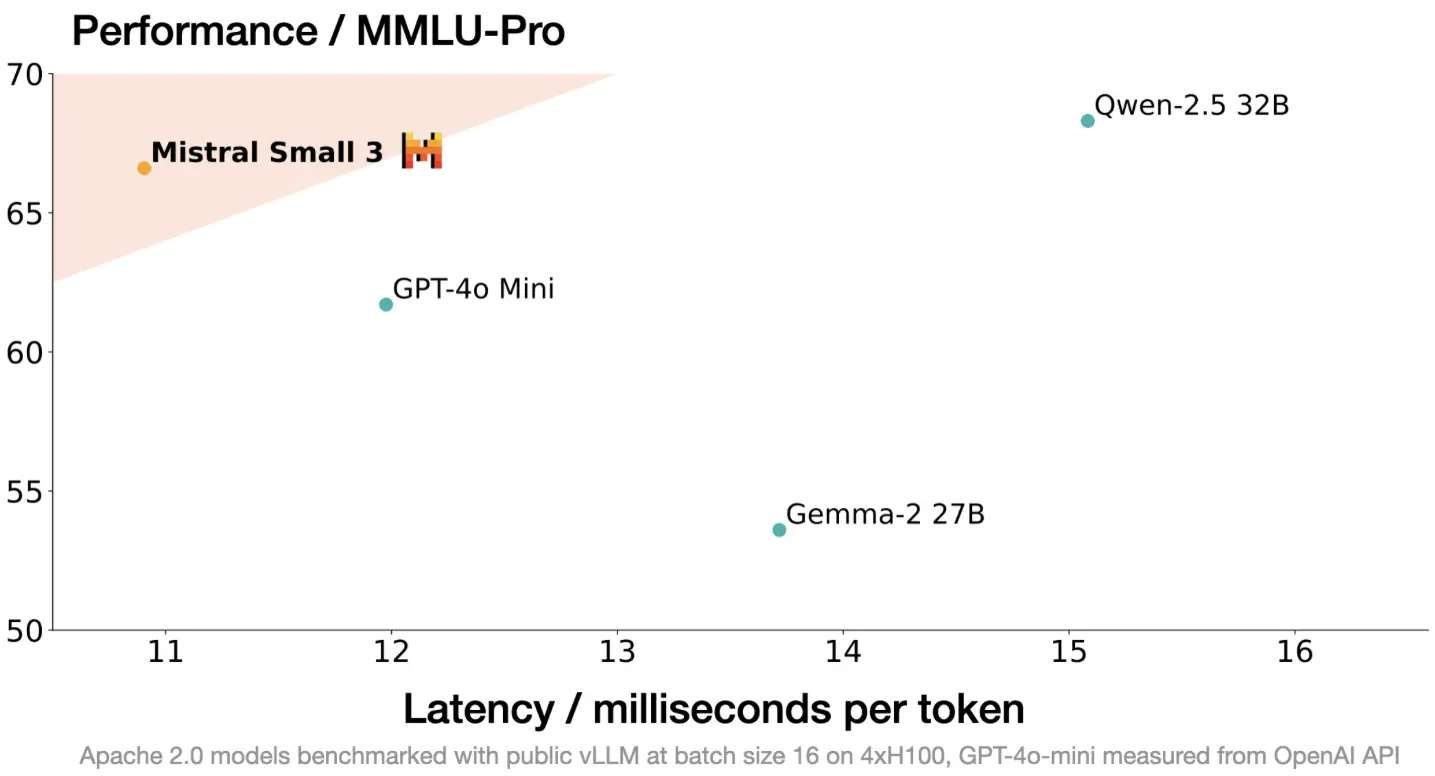

The French AI startup Mistral AI has launched Mistral Small 3, an open-source, latency-optimized model with 24B parameters.

The details:

- Mistral Small 3 competes with larger models such as Llama 3.3 70B and Qwen 2.5 32B. It is also an excellent open alternative to proprietary models like GPT-4o mini.

- In addition, the new Mistral model is more than three times faster than Llama 3.3 70B on the same hardware.

- Mistral Small 3 is designed to work well for local use cases. According to Mistral, it has far fewer layers than competing models and generates around 150 tokens/s, which makes it currently the most efficient model in its category.

- The model is released under the Apache 2.0 license and is available on Hugging Face (base model) and Ollama. Mistral encourages the open-source community to use it as a base for building new models.
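To make the 150 tokens/s figure more tangible, here is a back-of-the-envelope sketch in Python. It assumes a constant generation rate and ignores prompt processing and time-to-first-token, so real numbers on your own hardware will differ. The 300-token answer length is a hypothetical example, not a figure from Mistral.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate decoding time at a constant generation rate.

    Simplifying assumption: prompt processing (prefill) and
    time-to-first-token are ignored; only steady-state decoding is modeled.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Generation speed reported for Mistral Small 3 (tokens per second).
THROUGHPUT = 150.0

# Time per generated token in milliseconds.
ms_per_token = 1000.0 / THROUGHPUT

# Hypothetical answer length of 300 tokens.
answer_time = generation_time_seconds(300, THROUGHPUT)

print(f"{ms_per_token:.1f} ms per token")
print(f"{answer_time:.1f} s for a 300-token answer")
```

At this rate, each token takes roughly 6.7 ms, so a 300-token answer appears in about two seconds, which is fast enough to feel interactive in a local chat app.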

Our thoughts

As Europeans, we are always happy when Mistral introduces new models. It is one of the few European AI startups that can compete with the global players.

Mistral Small 3 is perfect for tasks that require robust language and instruction-following performance with very low latency. We are confident that the model will benefit the open-source community.

More information: 🔗 Mistral AI

🤖 Block unveils an open-source AI agent platform

Jack Dorsey’s fintech company, Block, launched an open-source AI agent framework called Goose to assist with engineering tasks.

The details:

- The platform supports any LLM backend, including OpenAI and Ollama. With Ollama, you can run Goose 100% locally, so you keep full control over your data.

- In addition, you can customize Goose with extensions. The extensions are based on Anthropic’s open-source Model Context Protocol (MCP), an open protocol for seamlessly integrating LLM applications with external data sources and tools.

- As a software engineer, you can use Goose for code migration, test generation, or bug fixing! It’s like your personal coding assistant with access to a specific directory on your computer.

- The framework was published under the Apache 2.0 license, allowing commercial use and modification.
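To give a feel for what "an open protocol for integration" means in practice: MCP messages are JSON-RPC 2.0 requests and responses. The Python sketch below builds the kind of requests an MCP client such as Goose might send to an extension (an MCP server) to list its tools and then call one. It is a simplified illustration, not Goose’s implementation. It omits the `initialize` handshake and the transport layer (for example stdio), and the tool name `read_file` and its `path` argument are hypothetical placeholders. Real servers advertise their own tools via `tools/list`, so check the MCP specification for the exact schema.

```python
import json
from itertools import count
from typing import Any, Optional

# A simple counter gives every request a unique ID, as JSON-RPC 2.0 requires.
_request_ids = count(1)


def make_request(method: str, params: Optional[dict[str, Any]] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind MCP uses."""
    message: dict[str, Any] = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": method,
    }
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it exposes.
list_tools = make_request("tools/list")

# Call a hypothetical tool "read_file" with hypothetical arguments.
call_tool = make_request(
    "tools/call",
    {"name": "read_file", "arguments": {"path": "src/main.py"}},
)

print(list_tools)
print(call_tool)
```

Because every extension speaks the same message format, Goose can plug in new tools without custom glue code for each one.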

Our thoughts

We tried Goose for you, and we are impressed. As data scientists and software engineers, we find the tool very helpful because it takes small tasks off our hands. It’s great that this tool is open-source. We will keep using it!

More information: 🔗 Goose | Goose Extensions

Magic AI tool of the week

Do you find writing meeting summaries or follow-up e-mails tedious? Then you should check out CastMagic! This tool turns your audio into content.

Imagine you’ve just finished a sales call. Now you need a summary of it, including the customer’s name, the questions asked, the sentiment, the next steps, and so on. Sound familiar?

CastMagic can do all of this tedious work for you, and you can focus on the conversation with your customer. It’s a win for you and your customers.

Articles of the week

- Our Journey to Become a Data Scientist

- Build an AI Finance Agent Team with phidata

- Build an AI Investment Agent with Chainlit and phidata

- Visualize financial data with beautiful Sankey diagrams

💡 Do you enjoy our content and want to read super-detailed articles about AI? If so, subscribe to our blog and get our popular data science cheat sheets for FREE.

Thanks for reading, and see you next time.

- Tinz Twins