Google launches its open-source model Gemma 2!

More topics: OpenAI presents CriticGPT to improve ChatGPT’s code output, and efficient AI language models without matrix multiplication

Hi AI Enthusiasts,

Welcome to this week’s Magic AI News, where we present the most exciting AI and tech news of the week. We organize the updates for you and share our thoughts!

This week’s Magic AI tool is a must-have for all podcast fans! Stay curious! 😎

Let’s explore this week’s AI news together. 👇🏽

Top AI news of the week

💭 Google launches its open-source model Gemma 2

Google Gemma 2 is now available for developers. Gemma is a family of lightweight, state-of-the-art open models based on the same technology used to create Google’s Gemini models.

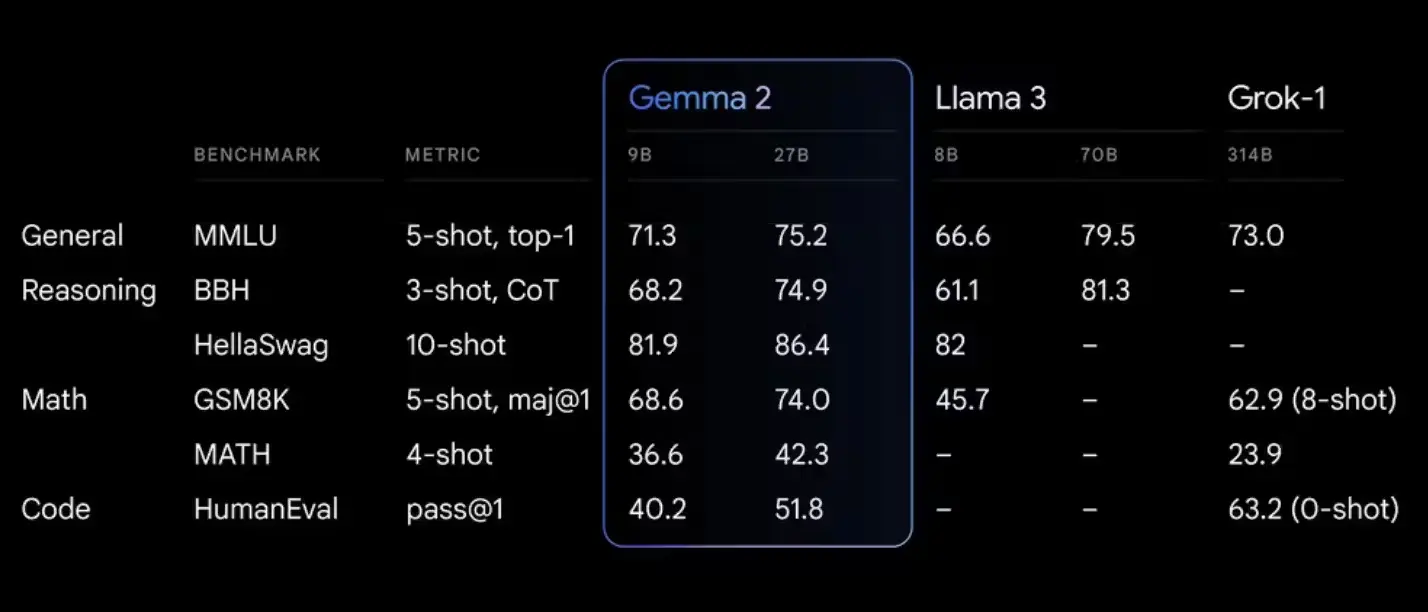

Gemma 2 comes in two sizes: 9B and 27B parameters. In addition, Google announced a 2.6B parameter model. According to Google, the 27B model offers competitive performance compared to models more than twice its size, and the 9B model outperforms similar models such as Llama 3 8B.

Our thoughts

Google has launched powerful and competitive open-source LLMs. In addition, the 27B model can run on a single NVIDIA H100 Tensor Core GPU or TPU host, which significantly reduces deployment costs. We think Gemma 2 will be very popular in the open-source community. The models are available on Hugging Face.
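Google’s claim that the 27B model runs on a single accelerator can be sanity-checked with back-of-the-envelope arithmetic. The sketch below assumes an 80 GB H100, 2 bytes per parameter for bfloat16 and 4 for float32, and the nominal parameter counts from the announcement; it counts the weights only, not activations or the KV cache.

```python
# Bytes needed to store one parameter in common number formats.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}

# Assumption: an NVIDIA H100 with 80 GB of memory.
GPU_MEMORY_GB = 80


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal parameter counts; the exact counts of the released models differ slightly.
for name, params in [("Gemma 2 9B", 9e9), ("Gemma 2 27B", 27e9)]:
    for dtype in ("float32", "bfloat16"):
        gb = weight_memory_gb(params, dtype)
        verdict = "fits" if gb < GPU_MEMORY_GB else "does not fit"
        print(f"{name}, {dtype}: {gb:.0f} GB of weights, {verdict} in {GPU_MEMORY_GB} GB")
```

For the 27B model this gives 108 GB in float32, which does not fit, and 54 GB in bfloat16, which leaves headroom on an 80 GB card. Since activations and the KV cache also need memory, the weights-only figure is a lower bound.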

More information

- Gemma 2 is now available to researchers and developers - Google Blog

🤖 OpenAI presents CriticGPT to improve ChatGPT’s code output

OpenAI has presented CriticGPT, an AI model based on GPT-4 that is designed to find errors in ChatGPT’s code output. According to OpenAI’s blog post, people who review ChatGPT’s code with help from CriticGPT outperform those without help 60% of the time. CriticGPT writes critiques that point out the errors it detects, which helps the human AI trainers who rate ChatGPT’s answers. OpenAI plans to integrate such models into its training pipeline based on reinforcement learning from human feedback (RLHF) to improve ChatGPT.

CriticGPT does not solve the problem of hallucinations, but it should lead to more precise answers. OpenAI names several limitations of the current model. CriticGPT was trained on short ChatGPT responses, so longer and more complex responses need to be included in the training in the future. In addition, real-world errors can be spread across many parts of an answer, and such dispersed errors must also be addressed in the future. For now, CriticGPT can only help to a limited extent.
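CriticGPT is a trained GPT-4-based model, not a set of rules, so the following is only a toy illustration of the shape of the task: a critic reads generated code and returns comments tied to specific lines. In this sketch, a few hand-written checks built on Python’s standard `ast` module stand in for the model. The `Critique` type and the `toy_critic` function are hypothetical names for this example, not part of any OpenAI API.

```python
import ast
from dataclasses import dataclass


@dataclass
class Critique:
    line: int     # 1-based line number in the reviewed code
    comment: str  # natural-language description of the suspected bug


def toy_critic(source: str) -> list[Critique]:
    """Hypothetical rule-based stand-in for an LLM critic such as CriticGPT."""
    critiques = []
    for node in ast.walk(ast.parse(source)):
        # A bare `except:` catches every exception and can hide real bugs.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            critiques.append(Critique(node.lineno, "Bare except hides all errors."))
        # Dividing by the literal 0 always raises ZeroDivisionError.
        if (
            isinstance(node, ast.BinOp)
            and isinstance(node.op, (ast.Div, ast.FloorDiv, ast.Mod))
            and isinstance(node.right, ast.Constant)
            and node.right.value == 0
        ):
            critiques.append(Critique(node.lineno, "Division by zero."))
    # ast.walk visits nodes breadth-first, so sort the findings by line.
    return sorted(critiques, key=lambda c: c.line)


# Code as a chat model might have generated it.
generated = '''def mean(xs):
    try:
        return sum(xs) / len(xs)
    except:
        return 0 / 0
'''

for c in toy_critic(generated):
    print(f"line {c.line}: {c.comment}")
```

Running it prints one critique for line 4 (the bare `except`) and one for line 5 (the division by zero). A real critic model produces free-form critiques and can catch bugs that no fixed rule anticipates, which is exactly why OpenAI trains a model for this job.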

Our thoughts

LLMs still make mistakes, including so-called hallucinations, where a model confidently presents false information. For this reason, LLMs should not be used in critical areas. We welcome OpenAI’s progress, but there is still a long way to go before LLMs work almost flawlessly. We will continue to follow research on this topic.

More information

- Finding GPT-4’s mistakes with GPT-4 - OpenAI Blog

- LLM Critics Help Catch LLM Bugs - OpenAI paper

🤔 Efficient AI language models without matrix multiplication

Researchers from the USA and China have developed language models that do not need computationally expensive matrix multiplications. According to the paper, these models can compete with state-of-the-art Transformers. Matrix multiplications are responsible for most of the resource requirements of language models, and this cost grows as the models scale.
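As we understand the paper, a key idea is to restrict the weights of dense layers to the ternary values -1, 0 and +1 (building on BitNet b1.58), so that a matrix-vector product needs only additions and subtractions; self-attention is replaced by a MatMul-free recurrent token mixer, which this sketch does not cover. The plain-Python sketch below shows only the dense-layer trick. The absmean rounding in `ternarize` follows the BitNet b1.58 recipe and is our assumption; the paper and its GitHub code may differ in the details.

```python
def ternarize(weights: list[list[float]], eps: float = 1e-8):
    """Map float weights to {-1, 0, +1} plus one scale (absmean rounding)."""
    flat = [abs(w) for row in weights for w in row]
    scale = sum(flat) / len(flat) + eps  # mean absolute weight
    q = [[max(-1, min(1, round(w / scale))) for w in row] for row in weights]
    return q, scale


def ternary_matvec(q: list[list[int]], x: list[float]) -> list[float]:
    """Compute q @ x using only additions and subtractions (no multiplications)."""
    out = []
    for row in q:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi  # +1 weight: add the input
            elif w == -1:
                acc -= xi  # -1 weight: subtract the input
            elif w != 0:
                raise ValueError(f"non-ternary weight: {w}")
            # 0 weight: the input is skipped entirely
        out.append(acc)
    return out


def dense_matvec(w: list[list[float]], x: list[float]) -> list[float]:
    """Ordinary multiply-accumulate matrix-vector product, for comparison."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


W = [[0.9, -0.1, -1.2], [0.05, 0.7, -0.6]]
x = [1.0, 2.0, 3.0]
q, scale = ternarize(W)
print(q)                     # [[1, 0, -1], [0, 1, -1]]
print(ternary_matvec(q, x))  # [-2.0, -1.0]
print(dense_matvec(q, x))    # [-2.0, -1.0], same result using multiplications
```

For ternary weights, the add/subtract loop gives exactly the same result as the ordinary product; the single float `scale` is then applied once per output value rather than once per weight.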

Furthermore, the authors provide a GPU-efficient implementation of the proposed MatMul-free model that reduces memory consumption during training by up to 61% compared to an unoptimized baseline. With an optimized kernel during inference, the model’s memory consumption can be reduced by more than ten times compared to unoptimized models.

For more in-depth information, we recommend reading the full paper. In addition, the authors provide the code implementation on GitHub.

Our thoughts

Matrix multiplications (MatMul) are responsible for much of the overall computational cost of large language models (LLMs). Training LLMs requires a huge amount of energy. For this reason, it is important to find more efficient methods so that AI progress and climate protection can go hand in hand.

More information

- Scalable MatMul-free Language Modeling - arXiv

- MatMul-Free LM - GitHub repo

Magic AI tool of the week

The tool Snipd allows you to save highlights from a podcast while listening, so you can access its most valuable information later. Currently, the tool supports 11 languages (English, German, Spanish, French, …), and more are planned.

If you love podcasts, then try it out!

Step-by-Step Guide

- You hear something interesting.

- Tap your headphones to save it to your library.

- An AI generates transcripts, summaries, and titles.

- Export your saved highlights to your favorite app, such as Notion*.

Articles of the week

- Mistral’s Codestral: Create a local AI Coding Assistant for VSCode

- 7 Hidden Python Tips for 2024

- Responsible Development of an LLM Application + Best Practices

💡 Do you enjoy our content and want to read super-detailed articles about AI? If so, subscribe to our blog and get our popular data science cheat sheets for FREE.

Thanks for reading, and see you next time.

- Tinz Twins

P.S. Have a nice weekend! 😉😉