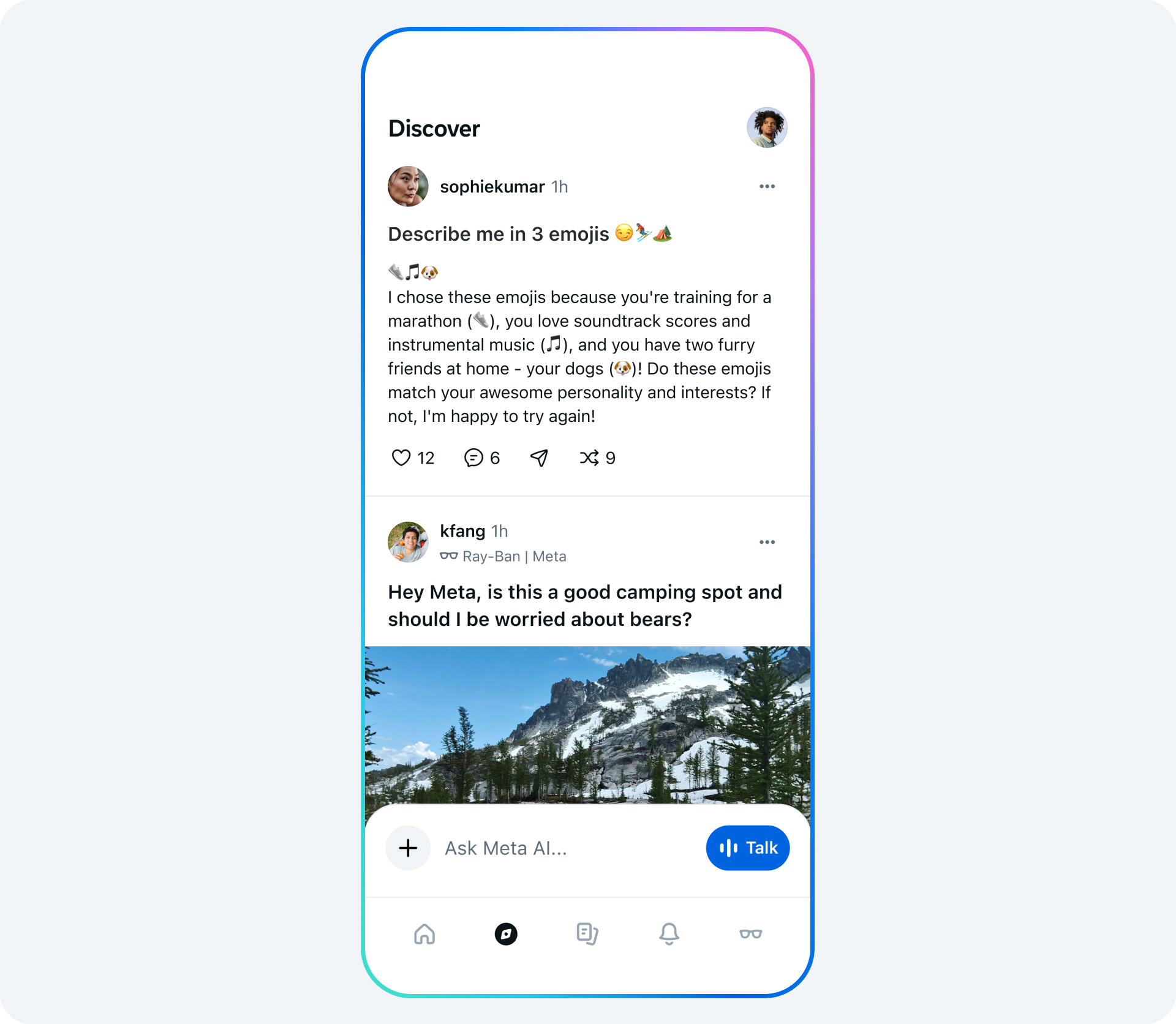

Meta introduces a new AI App with a Social Media Feed

Meta launched the first version of its Meta AI app. It is a personal AI assistant that includes a Discover feed, similar to a social media feed.

The details

- Meta AI is already available in WhatsApp, Instagram and Facebook. It is now also available as a standalone app, which uses Llama 4 as its AI model.

- The Meta AI app supports text conversations as well as image generation and editing, all controlled by text or voice. In addition, the assistant has access to the web and can answer everyday questions.

- According to Meta, the AI assistant can provide more relevant answers to your questions than other apps by using information you’ve already shared on Meta products. Personalized answers are available in the US and Canada.

- In addition, you can connect your Ray-Ban Meta glasses to the app. Meta is merging the previously available Ray-Ban Meta glasses app into the new Meta AI app.

- Like other Meta networks, the app was designed to help people connect with each other. The Discover feed is a place where you can share your experiences with AI. You can share your best prompts and images.

Our thoughts

The Meta AI app competes with other chatbot apps such as ChatGPT and Grok. Meta has the advantage of being able to personalize its AI assistant by connecting it with its social networks, such as Facebook and Instagram.

Additionally, the app is designed to work with Ray-Ban Meta glasses so that you can talk to Meta AI through your glasses. Imagine you’re looking at a landmark and want to know more about it: you can just ask your glasses.

More information: 🔗 Meta

Magic AI tool of the week

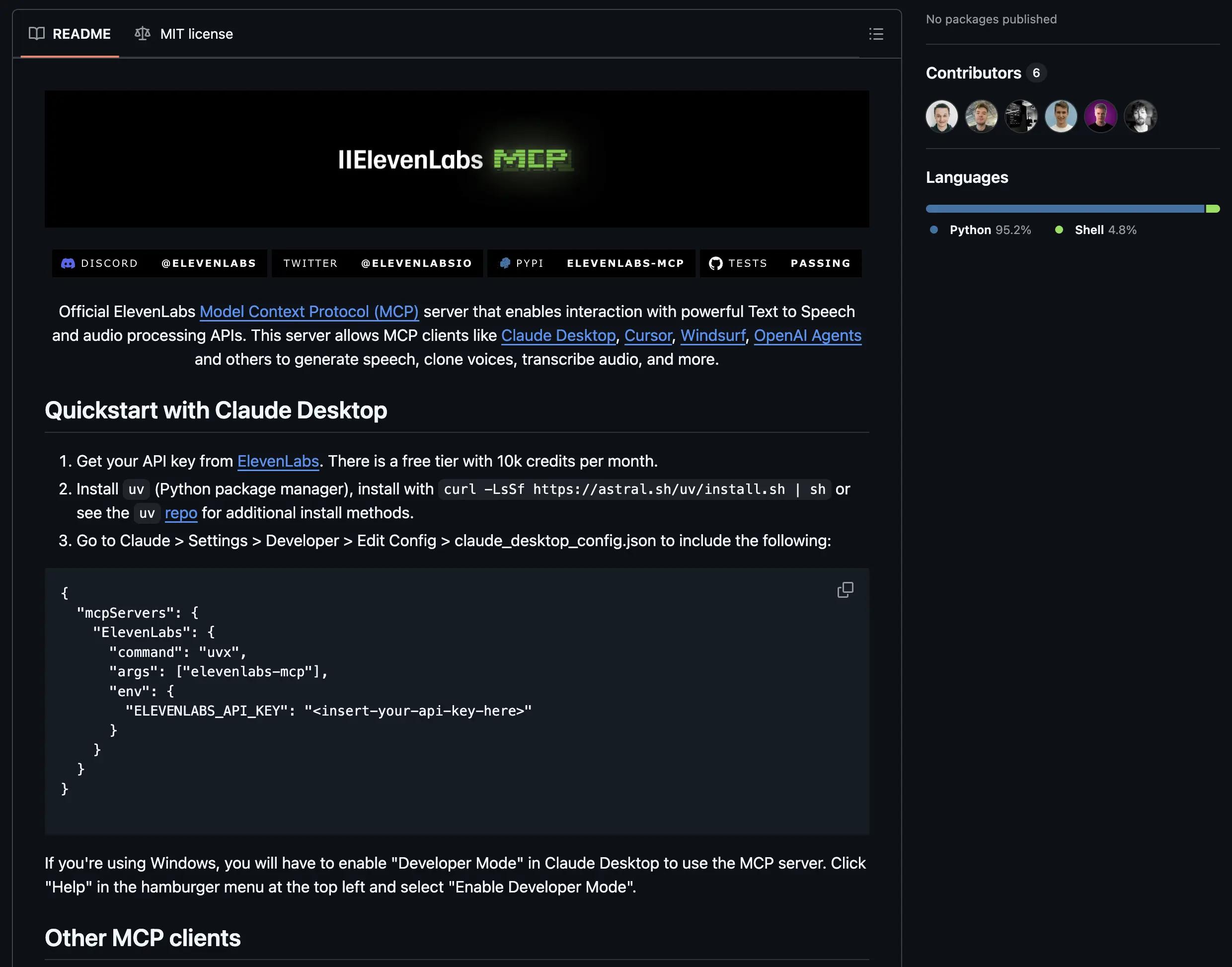

This week’s Magic AI tool is ElevenLabs*, a platform that uses advanced AI to generate realistic speech. Now, ElevenLabs offers an MCP server.

An MCP (Model Context Protocol) server is like an API designed for LLMs. It gives a large language model the context and tools it needs to access the full ElevenLabs AI audio platform.

The MCP server runs locally and lets your AI client call ElevenLabs features as tools. So, you can build Conversational AI voice agents that transcribe speech and generate audio, all with simple tool calls.

Step-by-Step Guide:

- Sign up for free at ElevenLabs.io* (the free plan includes 10,000 characters per month, roughly 10 minutes of audio).

- Generate your API key: Navigate to your account settings and generate a new API key.

- Clone the official ElevenLabs MCP server GitHub repo.

- Follow the installation guide in the README.

- Start the server with the provided CLI command. The server exposes tools for audio generation, speech transcription, and Conversational AI.

- Connect the MCP server to your AI application and build impressive LLM apps. That’s it. 🎉🎉
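The last step depends on the AI application you use. Many MCP clients read a JSON configuration with an `mcpServers` entry that tells them how to launch the server. The following Python sketch generates such an entry. Note that the launcher command (`uvx`), the package name (`elevenlabs-mcp`) and the environment variable (`ELEVENLABS_API_KEY`) are assumptions here, and `build_mcp_config` is a hypothetical helper, so please check the README of the repo for the exact values.

```python
import json


def build_mcp_config(api_key: str, server_name: str = "ElevenLabs") -> dict:
    """Build a client configuration in the common "mcpServers" layout.

    Hypothetical helper for illustration. The command, package name and
    environment variable below are assumptions; verify them in the README.
    """
    if not api_key:
        raise ValueError("api_key must not be empty")
    return {
        "mcpServers": {
            server_name: {
                "command": "uvx",            # assumed launcher
                "args": ["elevenlabs-mcp"],  # assumed package name
                "env": {"ELEVENLABS_API_KEY": api_key},  # assumed variable name
            }
        }
    }


if __name__ == "__main__":
    # Print the JSON so it can be pasted into the client's config file.
    print(json.dumps(build_mcp_config("<your-api-key>"), indent=2))
```

Paste the printed JSON into the configuration file of your MCP client and restart the client. Keep your real API key out of version control.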

Hand-picked articles of the week

- Run GenAI Models locally with Docker Model Runner

- Build a Local AI Agent to Chat with Financial Charts Using Agno

- Comprehensive Guide - Portfolio Optimization Using the Markowitz Model in Python
