Microsoft releases new small reasoning models

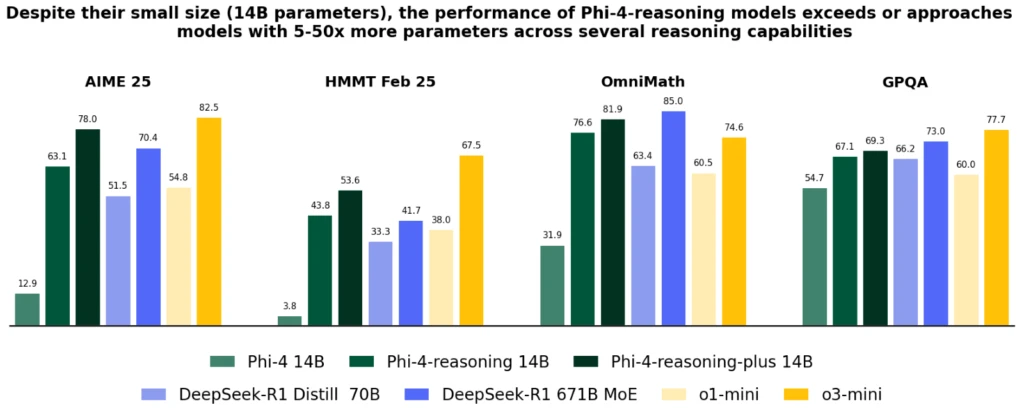

Microsoft launched three new open-weight reasoning models in its Phi-4 family, which outperform larger models on some benchmarks. The release shows how capable small models can be at reasoning tasks.

The details

- Microsoft uses distillation, reinforcement learning, and high-quality data to balance size and performance in the Phi-4 reasoning models. Phi-4-reasoning and Phi-4-reasoning-plus each have 14B parameters and open weights. There is also a Phi-4-mini-reasoning model with 3.8B parameters.

- The new models are small enough for low-latency environments, yet their reasoning abilities rival those of much larger models. Their small size also lets you run complex reasoning tasks on resource-limited devices like phones.

- All three small language models (Phi-4-mini-reasoning, Phi-4-reasoning, Phi-4-reasoning-plus) are available on Hugging Face.
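As a rough illustration of how one of these models could be tried locally, here is a minimal sketch using the Hugging Face `transformers` library. The repository IDs are assumed to sit under the `microsoft` organization, and `build_messages` and `generate_answer` are hypothetical helper names chosen for this example. Check each model card for the recommended prompt format and generation settings.

```python
# Assumed Hugging Face repo IDs for the three models named above.
PHI4_REASONING_MODELS = {
    "mini": "microsoft/Phi-4-mini-reasoning",  # 3.8B parameters
    "base": "microsoft/Phi-4-reasoning",       # 14B parameters
    "plus": "microsoft/Phi-4-reasoning-plus",  # 14B parameters
}


def build_messages(question: str) -> list[dict[str, str]]:
    """Wrap a question in the role/content format that chat templates expect."""
    return [{"role": "user", "content": question}]


def generate_answer(question: str, variant: str = "mini", max_new_tokens: int = 512) -> str:
    """Download the chosen model (several GB) and generate one answer."""
    # Imported lazily so the helpers above work without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = PHI4_REASONING_MODELS[variant]
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Apply the model's own chat template and tokenize in one step.
    inputs = tokenizer.apply_chat_template(
        build_messages(question),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, not the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate_answer("What is 17 * 23?")` would download the 3.8B model on first use. The 14B variants need considerably more memory, so the mini model is the more realistic starting point on a laptop.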

Our thoughts

Small language models are becoming increasingly powerful, which makes it possible to run them locally and efficiently on laptops and smartphones. This enables the use of powerful AI models in privacy-first environments. For example, we use local AI models in software development with Goose, a tool by Block.

More information: 🔗 Microsoft

Magic AI tool of the week

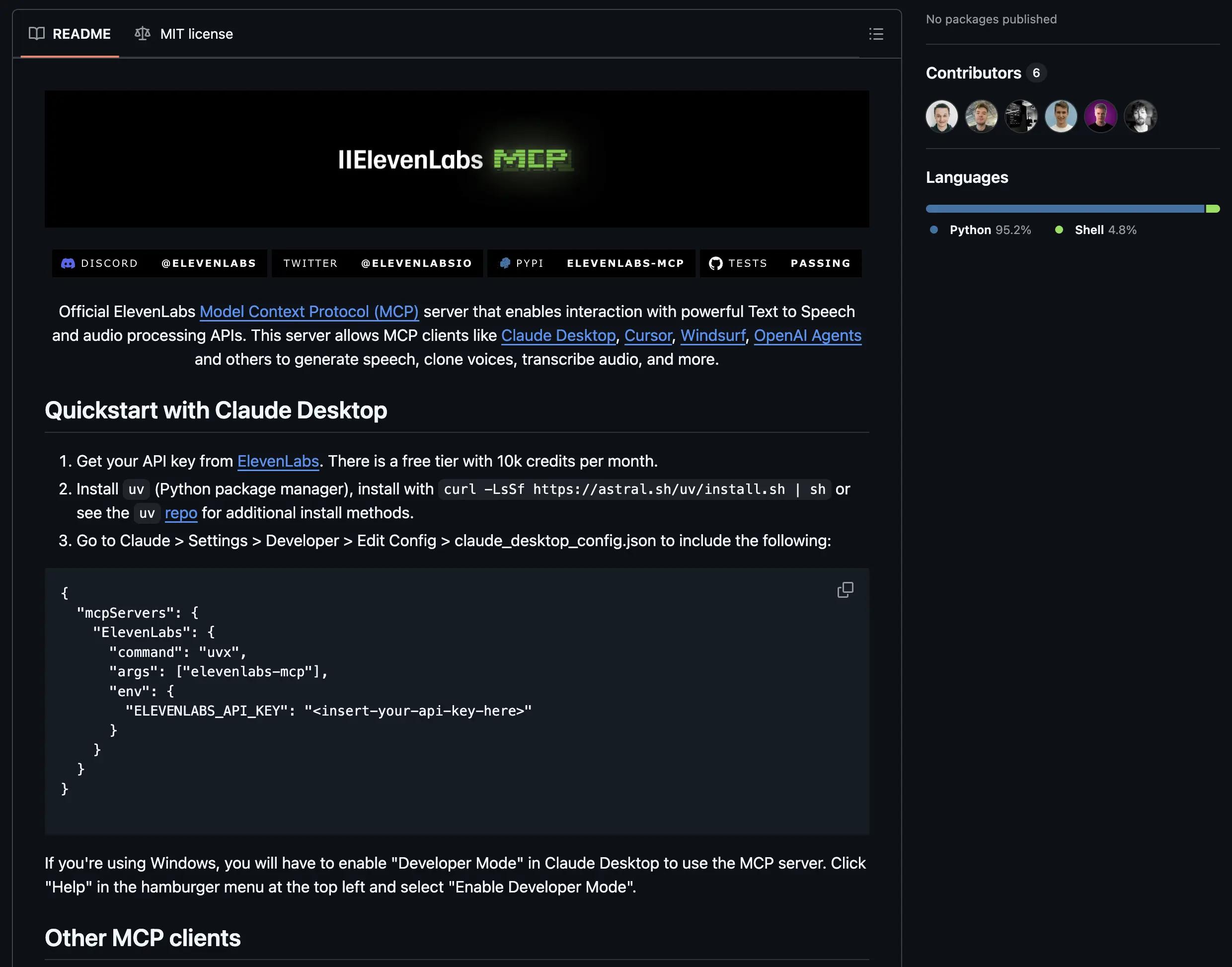

This week’s Magic AI tool is ElevenLabs*, a platform that uses advanced AI to generate realistic speech. ElevenLabs now offers an MCP server.

An MCP (Model Context Protocol) server is like an API designed for LLMs. It gives a large language model the context and tools it needs to access the full ElevenLabs AI audio platform.

The MCP server lets you run these AI audio tasks from local tools. For example, you can build Conversational AI voice agents that transcribe speech and generate audio, all with simple API calls.
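To make the "API designed for LLMs" idea more concrete: MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method. The sketch below only builds such a request as a string. The tool name `text_to_speech` and its `text` argument are assumptions for illustration, not confirmed names from the ElevenLabs server, and `make_tool_call` is a hypothetical helper.

```python
import json
from itertools import count

# Each JSON-RPC request needs a unique id so responses can be matched to it.
_request_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 'tools/call' request as an MCP client would send it."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


if __name__ == "__main__":
    # Hypothetical tool name and argument, for illustration only.
    print(make_tool_call("text_to_speech", {"text": "Hello from MCP!"}))
```

In practice an MCP client library or an MCP-aware application builds and sends these messages for you. The LLM only decides which tool to call and with which arguments.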

Step-by-Step Guide:

- Sign up for free at ElevenLabs.io* (the free tier includes 10,000 characters per month, roughly 10 minutes of audio).

- Generate your API key: Navigate to your account settings and generate a new API key.

- Clone the official ElevenLabs MCP server GitHub repo.

- Follow the installation guide in the README.

- Start the server with the provided CLI command. The server exposes tools for audio generation, speech transcription, and Conversational AI.

- Connect the MCP server to your AI application and build impressive LLM apps. That’s it. 🎉🎉
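For the last step, many MCP clients read a JSON configuration that tells them how to launch a server. The sketch below generates such a config under stated assumptions: the `mcpServers` layout follows the convention used by desktop MCP clients, and the launch command `uvx elevenlabs-mcp` and the environment variable `ELEVENLABS_API_KEY` are assumptions that should be checked against the README. `build_client_config` is a hypothetical helper name.

```python
import json
import os


def build_client_config(api_key: str) -> dict:
    """Return an MCP client config entry for the ElevenLabs server.

    Assumed values: the command, package name, and env variable name
    may differ from what the official README specifies.
    """
    return {
        "mcpServers": {
            "ElevenLabs": {
                "command": "uvx",
                "args": ["elevenlabs-mcp"],
                "env": {"ELEVENLABS_API_KEY": api_key},
            }
        }
    }


if __name__ == "__main__":
    # Read the key from the environment rather than hard-coding it.
    key = os.environ.get("ELEVENLABS_API_KEY", "<your-api-key>")
    print(json.dumps(build_client_config(key), indent=2))
```

Pasting the printed JSON into your client's MCP configuration file is usually enough for it to start the server on demand. Keep the API key out of version control.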

Hand-picked articles of the week

- Run GenAI Models locally with Docker Model Runner

- Build a Local AI Agent to Chat with Financial Charts Using Agno

- Comprehensive Guide - Portfolio Optimization Using the Markowitz Model in Python
