Midjourney introduces its first video model

Midjourney has released its first “Image-to-Video” model, called V1. The new model can animate any image into a 5-second video.

The details

- The model offers an automatic and a manual animation setting. In the manual setting, users describe in a prompt how things should move in the scene.

- V1 can animate images from Midjourney as well as images from other sources. In addition, users can extend a generated video up to four times, adding about 4 seconds each time.

- Midjourney charges about 8 times more for a video job than for an image job. Each video job produces four 5-second videos. According to Midjourney, this makes V1 about 25 times cheaper than other video models.

- According to the CEO, Midjourney’s mission is to build real-time 3D models. For this, they need four building blocks: image models, video models, 3D models, and real-time models.
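
To put these figures in perspective, here is a small back-of-the-envelope calculation in Python. It uses only the numbers quoted above (a 5-second base clip, up to four extensions of about 4 seconds each, and a video job that costs about 8 image jobs and yields four clips). The variable names are our own, and the results are rough estimates, not official Midjourney pricing.

```python
# Rough arithmetic based on the approximate figures quoted above.
BASE_CLIP_SECONDS = 5          # length of one generated video
EXTENSION_SECONDS = 4          # approximate length added per extension
MAX_EXTENSIONS = 4             # a video can be extended up to four times
VIDEOS_PER_JOB = 4             # each video job produces four clips
IMAGE_JOBS_PER_VIDEO_JOB = 8   # a video job costs about 8x an image job

# Longest clip you can reach by extending a single video four times.
max_clip_seconds = BASE_CLIP_SECONDS + MAX_EXTENSIONS * EXTENSION_SECONDS

# Total footage produced by one video job (before any extensions).
seconds_per_job = VIDEOS_PER_JOB * BASE_CLIP_SECONDS

# Cost expressed in "image jobs" per clip and per second of video.
image_jobs_per_clip = IMAGE_JOBS_PER_VIDEO_JOB / VIDEOS_PER_JOB
image_jobs_per_second = IMAGE_JOBS_PER_VIDEO_JOB / seconds_per_job

print(f"Maximum clip length: about {max_clip_seconds} seconds")
print(f"Footage per video job: {seconds_per_job} seconds")
print(f"Cost per clip: about {image_jobs_per_clip} image jobs")
print(f"Cost per second: about {image_jobs_per_second} image jobs")
```

In other words, a fully extended clip runs for roughly 21 seconds, and each 5-second clip costs about as much as two image jobs.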

Our thoughts

There are many video models available now, but this one creates videos in the distinctive Midjourney style. Midjourney is a great tool for creating images and videos. However, AI image and video generators also pose risks: deepfakes that claim to show conflict areas, for example, can spread misinformation.

More information: 🔗 Midjourney

Magic AI tool of the week

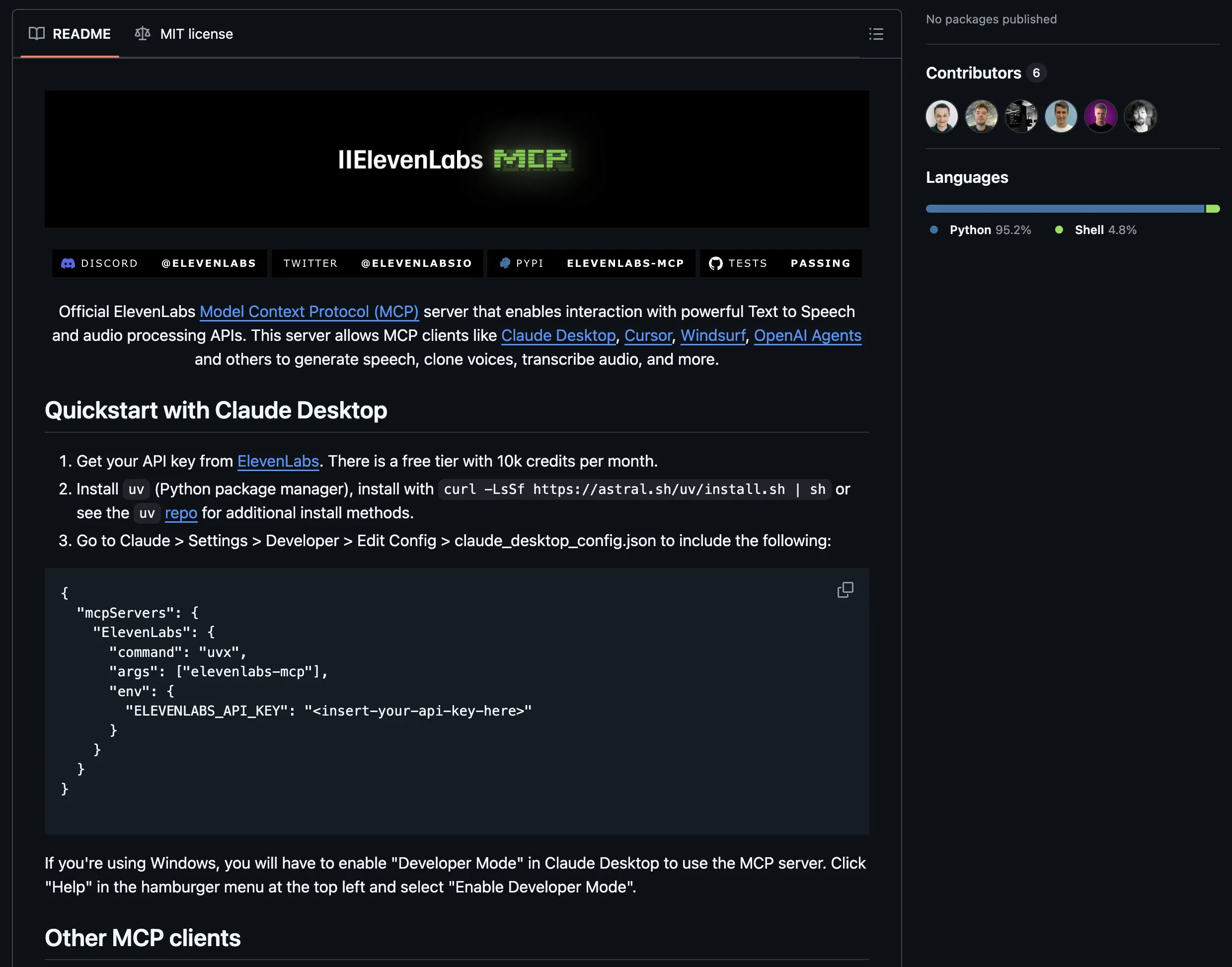

This week’s Magic AI tool is ElevenLabs*, a platform that uses advanced AI to generate realistic speech. Now, ElevenLabs offers an MCP server.

An MCP (Model Context Protocol) server is like an API designed for LLMs. It gives a large language model the context and tools it needs to access the full ElevenLabs AI audio platform.

So, you can build Conversational AI voice agents that transcribe speech and generate audio, all with simple API calls.

Step-by-Step Guide:

- Sign up for free at ElevenLabs.io* (15 minutes of Conversational AI)

- Generate your API key: Navigate to your account settings and generate a new API key.

- Clone the official ElevenLabs MCP server GitHub repo.

- Follow the installation guide in the README.

- Start the server with the CLI command provided in the README. The server exposes tools for audio generation, speech transcription, and Conversational AI.

- Connect the MCP server to your AI application and build impressive LLM apps. That’s it. 🎉🎉
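
As a rough illustration of the last step, the Python sketch below builds the kind of JSON configuration that many MCP clients read. Please treat the details as assumptions: we assume the client expects an `mcpServers` entry (as Claude Desktop does), that the server is launched with `uvx elevenlabs-mcp`, and that it reads the key from an `ELEVENLABS_API_KEY` environment variable. The helper name `build_mcp_config` is our own. Check the README of the official repo for the exact command and settings.

```python
import json
import os


def build_mcp_config(api_key: str) -> dict:
    """Return a client config entry for the ElevenLabs MCP server.

    The launch command and variable name are assumptions (see text above).
    """
    return {
        "mcpServers": {
            "ElevenLabs": {
                "command": "uvx",                 # assumed launcher
                "args": ["elevenlabs-mcp"],       # assumed package name
                "env": {"ELEVENLABS_API_KEY": api_key},
            }
        }
    }


if __name__ == "__main__":
    # Read the key from the environment so it never lands in source control.
    key = os.environ.get("ELEVENLABS_API_KEY", "<your-api-key>")
    print(json.dumps(build_mcp_config(key), indent=2))
```

You can paste the printed JSON into your MCP client's configuration file and restart the client so it picks up the new server.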

Hand-picked articles

- Run GenAI Models locally with Docker Model Runner

- Portfolio Allocation - How to Analyze a Stock Portfolio Using Python

- Mastering the Capital Asset Pricing Model (CAPM) Using Python

😀 Do you enjoy our content? If so, why not support us with a small financial contribution? As a supporter, you can comment on and like newsletter editions (e-mail version).