Mistral’s Codestral: A New LLM Designed for Coding

More topics: xAI plans a supercomputer for AI, and performing financial statement analysis with LLMs

Hi AI Enthusiasts,

Welcome to this week’s Magic AI News, where we present the most exciting AI news of the week.

This week’s Magic AI tool is an AI-powered coding assistant in VSCode based on Mistral’s Codestral. This tool can speed up your coding workflow. Stay curious! 😎

Let’s explore this week’s AI news and Magic AI tool together. 👇🏽

Top AI news of the week

🤖 xAI plans a supercomputer for AI

The AI start-up xAI has received six billion US dollars in funding. According to xAI’s blog post, “The funds from the round will be used to take xAI’s first products to market, build advanced infrastructure, and accelerate the research and development of future technologies.” xAI is now valued at 24 billion US dollars.

In addition, Elon Musk, the founder of xAI, announced that the start-up wants to build a supercomputer for AI. According to the tech portal The Information, xAI wants to connect 100,000 NVIDIA H100 GPUs and plans to get the supercomputer operational by fall 2025.

Our thoughts

The demand for AI hardware continues unabated. Microsoft and OpenAI have also discussed a ‘Stargate’ cluster even bigger than Elon Musk’s proposal.

In our view, we will see a massive increase in computing power over the next few years. Soon, every laptop will be an AI computer. The direction of Big Tech is clear: all in on AI!

More information

- Elon Musk’s xAI valued at $24 bln after fresh funding - Reuters

- Musk Plans xAI Supercomputer, Dubbed ‘Gigafactory of Compute’ - The Information

📈 Study: Performing financial statement analysis with LLMs

Researchers from the University of Chicago investigated whether “an LLM can successfully perform financial statement analysis in a way similar to a professional human analyst”.

Here are the key points:

- The researchers provided GPT-4 with standardized, anonymized financial statements and asked it to analyze future earnings.

- The LLM outperforms financial analysts in predicting earnings changes, even without any industry-specific information.

- The LLM also achieves better results than other state-of-the-art models.

- The results suggest that LLMs can “take a central role in decision-making”.

In addition, the researchers created a custom GPT for ChatGPT Plus users to showcase the capabilities of state-of-the-art LLMs in financial analysis.

Our thoughts

That’s an interesting study about the use of LLMs for financial statement analysis. It suggests that AI will dramatically change how investors analyze the stock market. In the future, everyone could have access to a personal financial analyst, which will change how private investors operate in the market.

More information

👨🏽‍💻 Mistral’s Codestral: A New LLM Designed for Coding

On Wednesday, the Mistral AI team unveiled their first-ever code model, Codestral. It is an open-weight AI model designed for code generation tasks, released under the Mistral AI Non-Production License.

Here are the key points:

- Codestral is trained on 80+ programming languages such as Python, Java, C, C++, …

- Codestral can complete functions and write tests, which makes it a useful assistant for developers.

- Codestral has 22B parameters and a context window of 32k tokens.

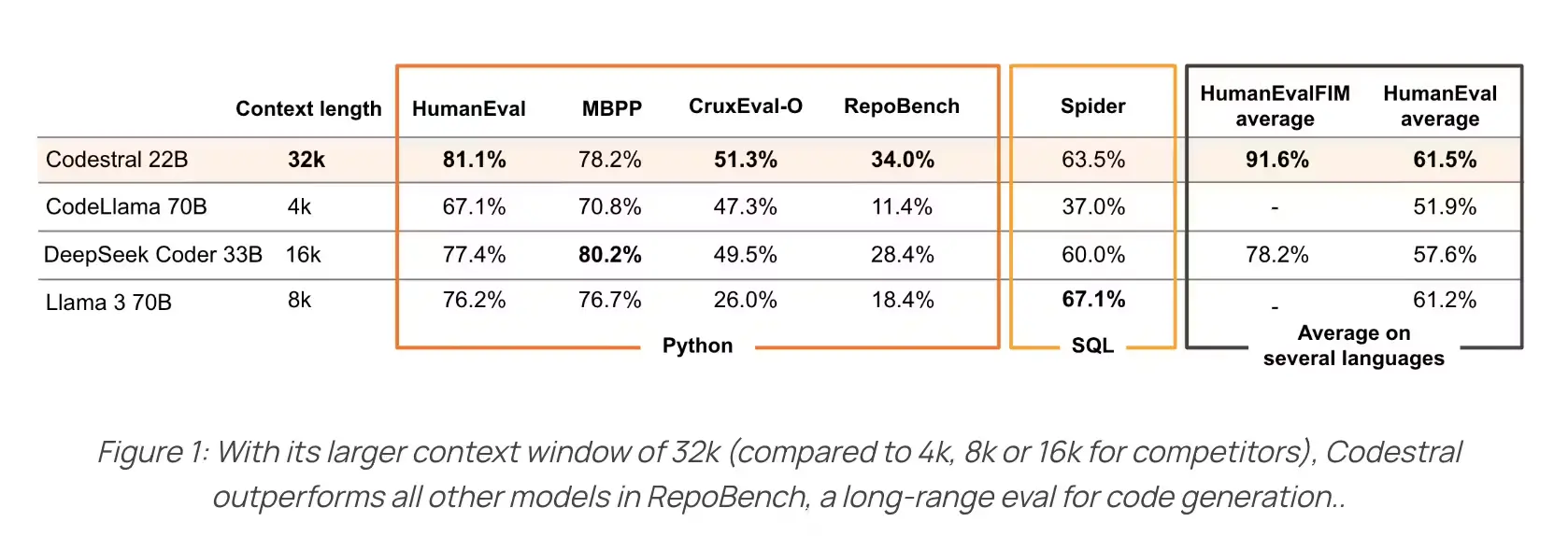

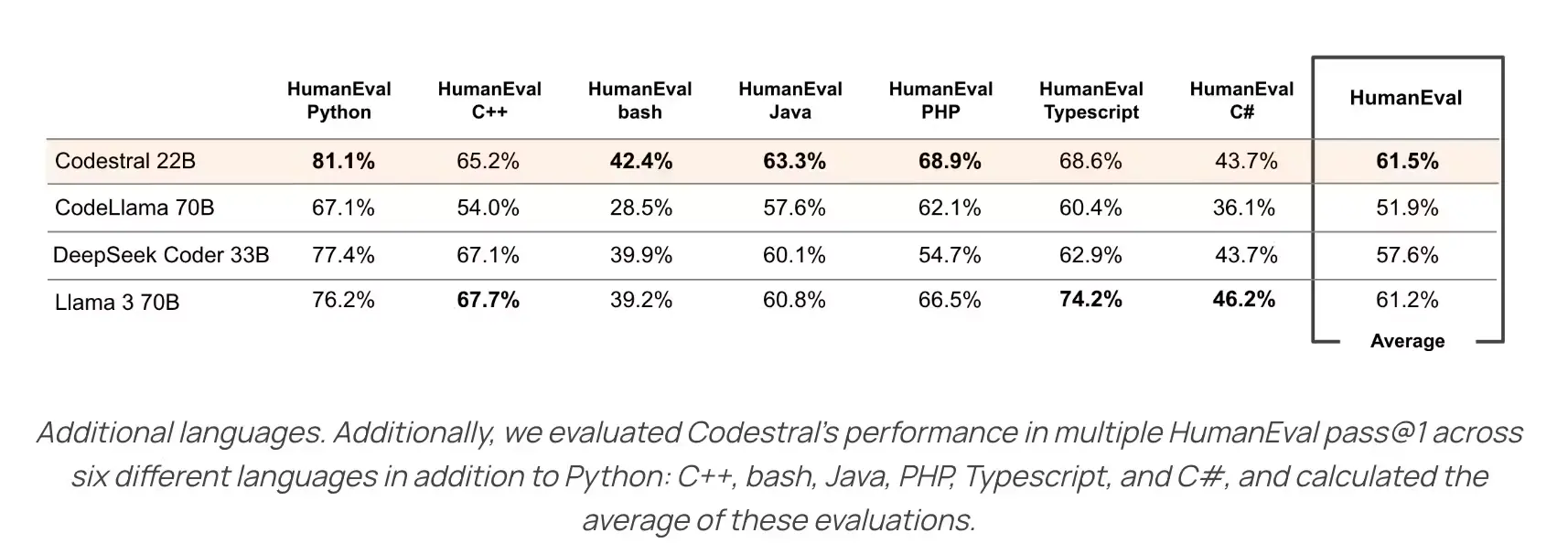

Codestral outperforms previous code models on several benchmarks, as the results above show.

Our thoughts

Mistral’s new code model shows impressive performance. Best of all, you can use the model in VSCode with Continue.dev or Tabnine. These integrations let developers generate code and chat with their codebase using state-of-the-art models.

Models for coding tasks keep getting better and are accessible to everyone. If you don’t want to use an API and would rather create a local coding assistant (including Mistral’s Codestral), check out today’s Magic AI tool.

More information

- Codestral: Hello, World! - Mistral AI News

Magic AI tool of the week

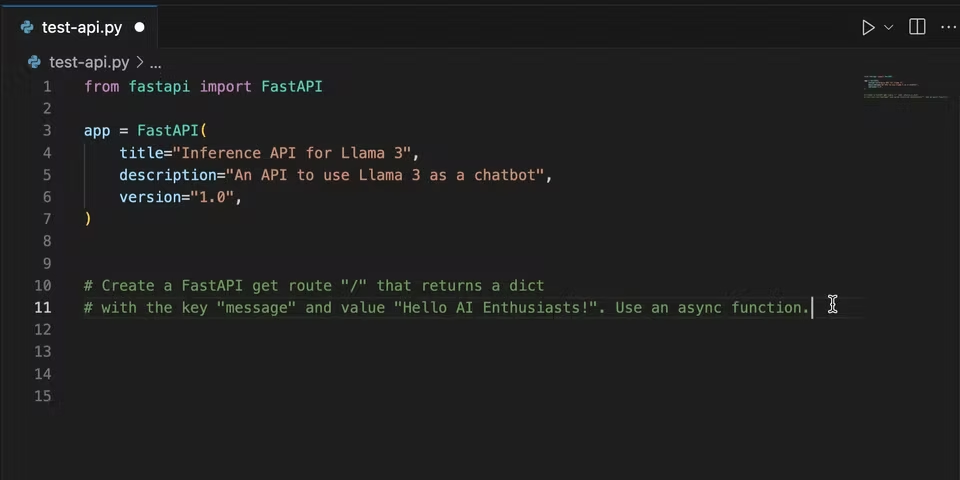

Have you ever wanted a local AI coding assistant? If so, you’ve come to the right place! Not long ago, most coding assistants were only available through the API of a big provider like OpenAI or Anthropic. Those days are a thing of the past.

Now, you can run state-of-the-art LLMs like Llama 3, Phi 3, or Codestral locally on your laptop. The VSCode extension Continue and the open-source tool Ollama make this possible.
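To give a feel for what "locally" means here, the following minimal sketch talks to a local Ollama server directly over its HTTP API. It assumes two things. First, Ollama is running on its default port 11434. Second, the model was pulled beforehand with `ollama pull codestral`. The function names `build_request` and `ask_codestral` are hypothetical helpers for illustration only. They are not part of Ollama or Continue.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port 11434 and the
# model was pulled beforehand with `ollama pull codestral`.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "codestral") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_codestral(prompt: str) -> str:
    """Send the prompt to the local model and return the generated text."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, Ollama returns a single JSON object whose
    # "response" field holds the complete answer.
    return body["response"]
```

With the server running, `print(ask_codestral("Write a Python function that checks whether a number is prime."))` would print the model's answer without any external API. In VSCode, you don't need this code yourself, because Continue's Ollama integration talks to the same local server.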

To learn how to create an AI coding assistant, check out our latest blog article. After reading it, you’ll be able to chat with your codebase in VSCode and use features like autocompletion.

👉🏽 Learn more in our article “Mistral’s Codestral: Create a local AI Coding Assistant for VSCode”!

Articles of the week

- LlamaIndex: Use the Power of LLMs on Your Data

- Create Engaging Charts Using Plotly Express in Python

- Responsible Development of an LLM Application + Best Practices

💡 Do you enjoy our content and want to read super-detailed articles about AI? If so, subscribe to our blog and get our popular data science cheat sheets for FREE.

Thanks for reading, and see you next time.

- Tinz Twins

P.S. Have a nice weekend! 😉😉