OpenAI releases open safety reasoning models

OpenAI has introduced two new open-weight models called gpt-oss-safeguard, in 20b and 120b sizes. Both are safety reasoning models that classify content according to a safety policy supplied by the developer.

The details

-

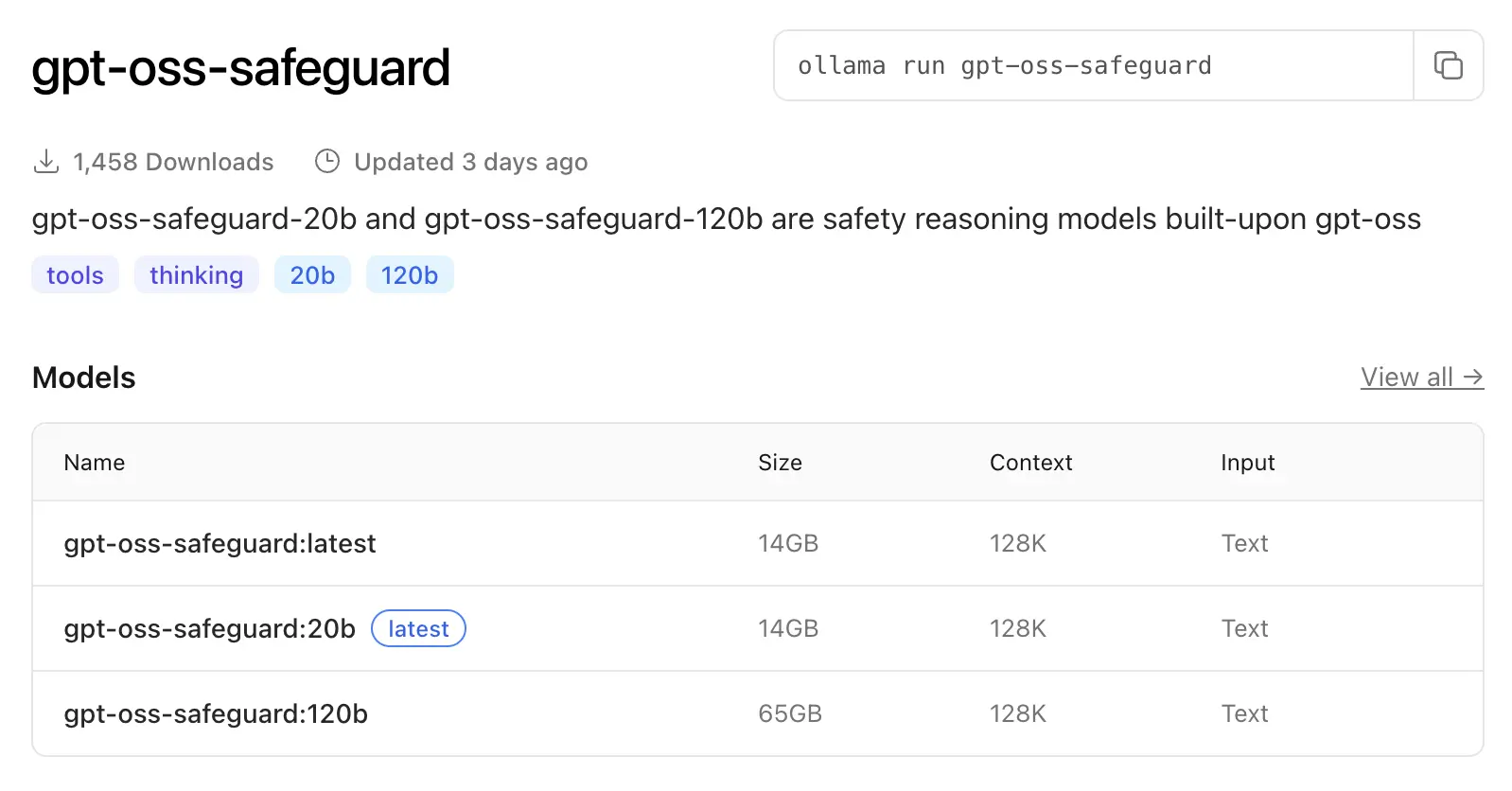

The new gpt-oss-safeguard models come in two sizes (20b and 120b parameters). Both models are fine-tuned versions of the gpt-oss open models. The new models are available under the same permissive Apache 2.0 license as gpt-oss, which allows developers to use, modify, and deploy them freely.

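- The models use reasoning to interpret a policy that the developer supplies at inference time. Because the policy is an input rather than something learned during training, developers can choose, swap, or revise it without retraining the model, which keeps classifications aligned with their own use case.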

- According to OpenAI, the models use a chain-of-thought process that developers can review to understand how the model makes its decisions.

- OpenAI collaborated with ROOST to develop gpt-oss-safeguard. ROOST (Robust Open Online Safety Tools) is a nonprofit open-source initiative that develops and distributes accessible AI safety tools to help organizations build scalable, resilient, and responsible safety infrastructure.

- The gpt-oss-safeguard models are available via Hugging Face and Ollama.
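To illustrate what "a policy provided at inference" can look like in practice, here is a minimal Python sketch that sends a policy and a piece of content to gpt-oss-safeguard running locally in Ollama. It assumes Ollama's default REST endpoint (`http://localhost:11434/api/chat`), the model tag `gpt-oss-safeguard:20b`, and a prompt layout with the policy in the system message and the content to classify in the user message. It also assumes the server returns the model's reasoning in a `thinking` field. The example policy and the helpers `build_chat_request`, `parse_response`, and `classify` are hypothetical, so check OpenAI's and Ollama's documentation for the recommended prompt format.

```python
import json
import urllib.request

# Assumptions: a local Ollama server on its default port, and the model pulled
# under this tag (verify the exact tag in the Ollama library).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL_TAG = "gpt-oss-safeguard:20b"

# Hypothetical policy: the model reads whatever policy text you supply.
EXAMPLE_POLICY = """You classify posts for a gaming forum.
Label a post VIOLATING if it offers accounts or cheats for sale.
Otherwise label it SAFE.
Answer with exactly one word: VIOLATING or SAFE."""


def build_chat_request(policy: str, content: str, model: str = MODEL_TAG) -> dict:
    """Hypothetical helper: policy goes in the system message, the item to classify in the user message."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": policy},
            {"role": "user", "content": content},
        ],
        "stream": False,  # ask Ollama for one JSON response instead of a stream
    }


def parse_response(body: dict) -> tuple[str, str]:
    """Return (label, reasoning) from an Ollama /api/chat response body."""
    message = body.get("message", {})
    label = message.get("content", "").strip()
    # Assumption: the chain of thought arrives in a separate "thinking" field;
    # fall back to an empty string if the server omits it.
    reasoning = message.get("thinking", "") or ""
    return label, reasoning


def classify(policy: str, content: str) -> tuple[str, str]:
    """Send one classification request (requires a running Ollama server)."""
    payload = json.dumps(build_chat_request(policy, content)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return parse_response(json.load(resp))
```

With the server running and the model pulled (for example with `ollama pull gpt-oss-safeguard:20b`, assuming that tag), calling `classify(EXAMPLE_POLICY, "Selling level-99 accounts, DM me!")` returns the label plus any reasoning trace for review. Keeping the policy as a plain string means it can be versioned and swapped like any other configuration.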

Our thoughts

We have already tested gpt-oss in our step-by-step guides and think it is a great model for the open-source community. Like gpt-oss, gpt-oss-safeguard can be used freely: the Apache 2.0 license has no copyleft requirements and includes an express patent grant from contributors. That makes it well suited for experimentation, customization, and commercial deployment.

More information: 🔗 OpenAI
