Python is the most widely used programming language in 2024

More topics: Apple invests in building M4 AI servers | Grok can now understand images and has an API

Hi AI Enthusiasts,

Welcome to this week’s Magic AI News, where we bring you the most exciting AI news of the week. Today, we are talking about the top programming languages on GitHub and Apple’s M4 AI servers for Apple Intelligence. Stay curious! 😎

This week’s Magic AI tool is an AI assistant with multi-user support. You can chat with your documents locally and use AI agents, with no frustrating setup required. This tool boosts your productivity from day one!

Let’s explore this week’s AI news together. 👇🏽

Top AI news of the week

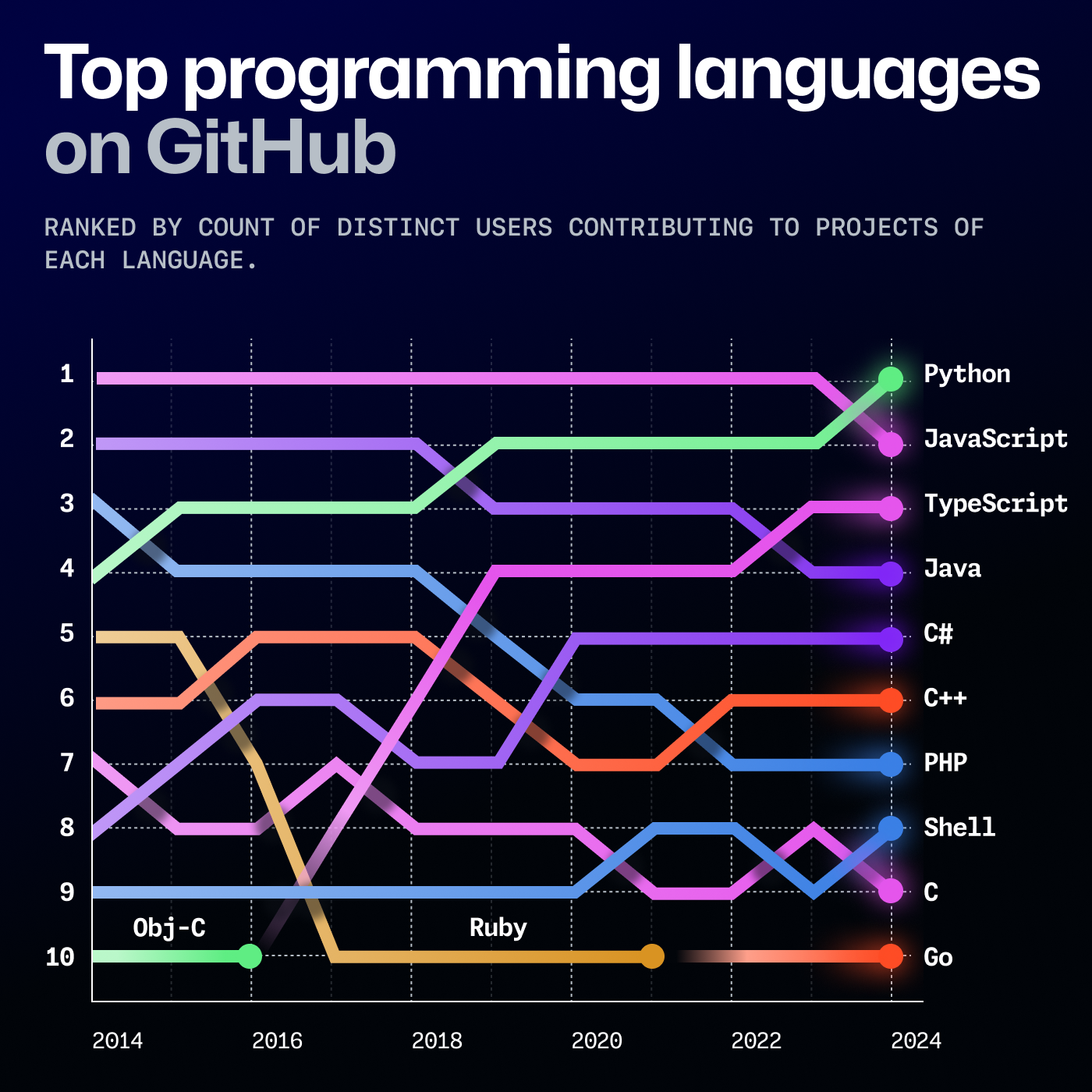

🐍 Python is the most widely used programming language

GitHub’s Octoverse report shows that Python’s central role in AI and data science is driving its growing popularity among developers.

The details:

- In 2024, Python overtook JavaScript as the most widely used programming language on GitHub for the first time.

- Jupyter notebooks are also very popular with data scientists, with usage up 92% from the previous year.

- In 2024, the number of contributions to generative AI projects on GitHub increased by 59%, and the total number of projects by 98%.

- More developers are joining GitHub to participate in open-source projects.

Our thoughts

Wow, Python is now #1. For years, we’ve been saying that Python is the best choice for most software projects. The trend shows that many developers use Python more often than other languages.

This is due to Python’s extensive ecosystem. There are packages for almost every use case, from home automation to web development. Python is a popular programming language for both beginners and experienced developers.

Did you know that some well-known websites were built with Python? Examples include Instagram, Google, Spotify, Netflix, Pinterest, and Reddit.

More information: 🔗 GitHub

🤖 Apple invests in building M4 AI servers

Apple wants to use the new M4 chips in its Apple Intelligence infrastructure. According to the Japanese business news agency Nikkei, Apple has given Foxconn the task of building AI servers based on M4 chips.

The details:

- Like other companies, Apple relies on cloud providers such as Google Cloud and Amazon Web Services for iCloud.

- However, Apple requires its own cloud infrastructure for the announced Private Cloud Compute approach.

- According to Apple, as many Apple Intelligence requests as possible are processed locally on the device. When a workload becomes too complex, however, the task is offloaded to Apple’s own cloud.

Our thoughts

Apple is preparing to roll out many new AI features. Many people wonder why it takes Apple so long. Well, Apple probably doesn’t have enough computing power to roll out all the features right now.

In the past, Apple has primarily relied on other cloud providers such as Google Cloud and Amazon Web Services. Now, Apple is starting to build its own AI data centers.

In our view, this is a good move, as it makes Apple less dependent on Google and Amazon. In addition, this will make Apple products more secure because of its Private Cloud Compute approach.

More information: 🔗 Heise Online | Apple Magazine

💬 Grok can now understand images and has an API

xAI has announced the availability of a new API. This API gives developers access to Grok foundation models. In addition, users can now upload images to Grok, and the models can analyze these images.

The details:

- Developers have been able to use the new API since November 4.

- It’s a public beta program until the end of 2024. Everyone gets $25 in free credits per month.

- The REST API is fully compatible with the SDKs of OpenAI and Anthropic. This makes migration simple. Learn more in the developer documentation.

Our thoughts

It is impressive how fast xAI releases new functionality. The new API gives you access to grok-beta, a model that performs similarly to Grok 2 but is faster and more efficient, with better capabilities. And best of all, you can use the OpenAI SDK, so migration is very easy.
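To make the compatibility claim a bit more concrete, here is a minimal sketch of what an OpenAI-style chat request to Grok could look like. It uses only the Python standard library instead of the OpenAI SDK, so you can see the raw request. Please note the assumptions: the base URL `https://api.x.ai/v1`, the `/chat/completions` path, and the environment variable name `XAI_API_KEY` are our guesses based on the OpenAI convention, so check the xAI developer documentation before relying on them.

```python
import json
import os
import urllib.request

# Assumptions (not confirmed in this newsletter): base URL and endpoint path
# follow the OpenAI convention. Verify both in the xAI developer documentation.
XAI_BASE_URL = "https://api.x.ai/v1"
MODEL = "grok-beta"  # model name mentioned in the xAI announcement


def build_chat_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{XAI_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    # XAI_API_KEY is a hypothetical variable name chosen for this sketch.
    api_key = os.environ.get("XAI_API_KEY")
    if api_key:
        request = build_chat_request("Say hello in one sentence.", api_key)
        with urllib.request.urlopen(request) as response:
            body = json.load(response)
        # Assumes the response follows the OpenAI chat completion schema.
        print(body["choices"][0]["message"]["content"])
    else:
        print("Set XAI_API_KEY to send the request.")
```

If you already use the OpenAI Python SDK, the equivalent change would presumably be to pass the same base URL and your xAI key when creating the client, and to set the model name to grok-beta.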

According to Elon Musk, Grok should be the best model on the market by the end of this year. We have to wait and see if xAI succeeds.

More information: 🔗 xAI Blog

Magic AI tool of the week

Are you looking for a user-friendly tool that can run state-of-the-art LLMs on your local machine? Just like ChatGPT, but 100% local and private!

Then AnythingLLM might be just what you need! It is a desktop application with a suite of tools. You can run state-of-the-art LLMs locally without an internet connection. It supports custom AI agents and multi-modal models.

Just upload PDF or TXT files and chat with your documents. It’s available for Mac (Apple Silicon and Intel), Windows and Linux. There is also a Docker version with multi-user instance support.

Articles of the week

- Our Journey to Become a Data Scientist

- Dive into Investment Research - A Beginner’s Guide to the OpenBB Platform

- Understand and Implement an Artificial Neural Network from Scratch

- Mastering Time Series Analysis - An Introduction to ETS Models

💡 Do you enjoy our content and want to read super-detailed articles about AI? If so, subscribe to our blog and get our popular data science cheat sheets for FREE.

Thanks for reading, and see you next time.

- Tinz Twins