Weekly Magic AI - 1-Bit LLMs, Google Search Update, Claude 3

Top AI news of the week, Magic AI tool of the week, and article of the week

Hi AI Enthusiast,

Welcome to our weekly Magic AI, where we bring you the latest updates on Artificial Intelligence (AI) in an accessible way. This week’s Magic AI tool is perfect for all productivity junkies! Be curious! 😀

Top AI news of the week

🤖 Microsoft researchers present 1-bit LLMs

Microsoft researchers have published a new approach that restricts every parameter of a large language model (LLM) to one of three values: -1, 0, or 1. According to the paper, this research paves the way for a new era of 1-bit LLMs. The authors also call it a 1.58-bit LLM, because a parameter with three possible values carries log2(3) ≈ 1.58 bits of information.

According to the paper, the presented LLM matches a full-precision (FP16 or BF16) Transformer LLM of the same model size in performance. FP16 and BF16 are 16-bit floating-point formats that are commonly used to store model weights. At the same time, the 1.58-bit LLM is more efficient in terms of latency, memory, throughput, and energy consumption. The authors see it as a new recipe for training future LLMs that are both powerful and cost-effective. Furthermore, this approach opens the door to hardware designed specifically for 1-bit LLMs.
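To make the "1.58-bit" name more concrete, here is a minimal Python sketch. It computes the information content of a three-valued weight and rounds a handful of made-up float weights to -1, 0, or 1. The `ternarize` helper and its scale-and-round rule are our own illustration, not the training procedure from the paper, and the size ratio is a theoretical upper bound rather than a measured result.

```python
import math

# Information content of one weight that can take three values (-1, 0, 1).
bits_per_ternary_weight = math.log2(3)


def ternarize(weights):
    """Toy rounding of float weights to -1, 0, or 1 (illustrative only).

    Assumption: we scale by the mean absolute weight and then round and clip.
    This is a simplification for demonstration, not the paper's method.
    """
    scale = sum(abs(w) for w in weights) / len(weights) or 1.0
    return [max(-1, min(1, round(w / scale))) for w in weights]


# Hypothetical example weights.
weights = [0.42, -0.03, -0.91, 0.08, 0.65]
ternary = ternarize(weights)

print(f"bits per ternary weight: {bits_per_ternary_weight:.2f}")
print(f"ternary weights: {ternary}")
print(f"theoretical FP16-to-ternary size ratio: {16 / bits_per_ternary_weight:.1f}x")
```

In practice, the memory savings depend on how the ternary values are packed and on which parts of the model stay in higher precision, so treat the ratio as a rough guide.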

Our thoughts

Energy consumption is a critical challenge when training and running LLMs. The paper presents an approach that promises AI models with comparable performance and significantly better energy efficiency.

After the many LLM releases, it is time to think about efficiency. You can always buy more powerful hardware, but it is better to reduce costs through optimization. The paper is a further step towards efficient LLMs.

🔍 Google Search update against AI spam

Google wants to improve its search engine by better filtering out AI-generated content/spam. In the blog post, Google writes that the March 2024 update “is designed to improve the quality of Search by showing less content that feels like it was made to attract clicks, and more content that people find useful.”

Google has also published a new version of its spam policy. It states that using generative AI or similar tools to create many pages without added value for users is not permitted.

The update can be summarized as follows: “There’s nothing new or special that creators need to do for this update as long as they’ve been making satisfying content meant for people.”

Our thoughts

It’s good to see Google focusing more on fighting spam. With the rise of generative AI, keeping search results relevant is more important than ever.

More information

- What web creators should know about our March 2024 core update and new spam policies - Google Search Central Blog

- Spam policies for Google web search - Google Search Central Blog

💬 Anthropic released the next generation of Claude

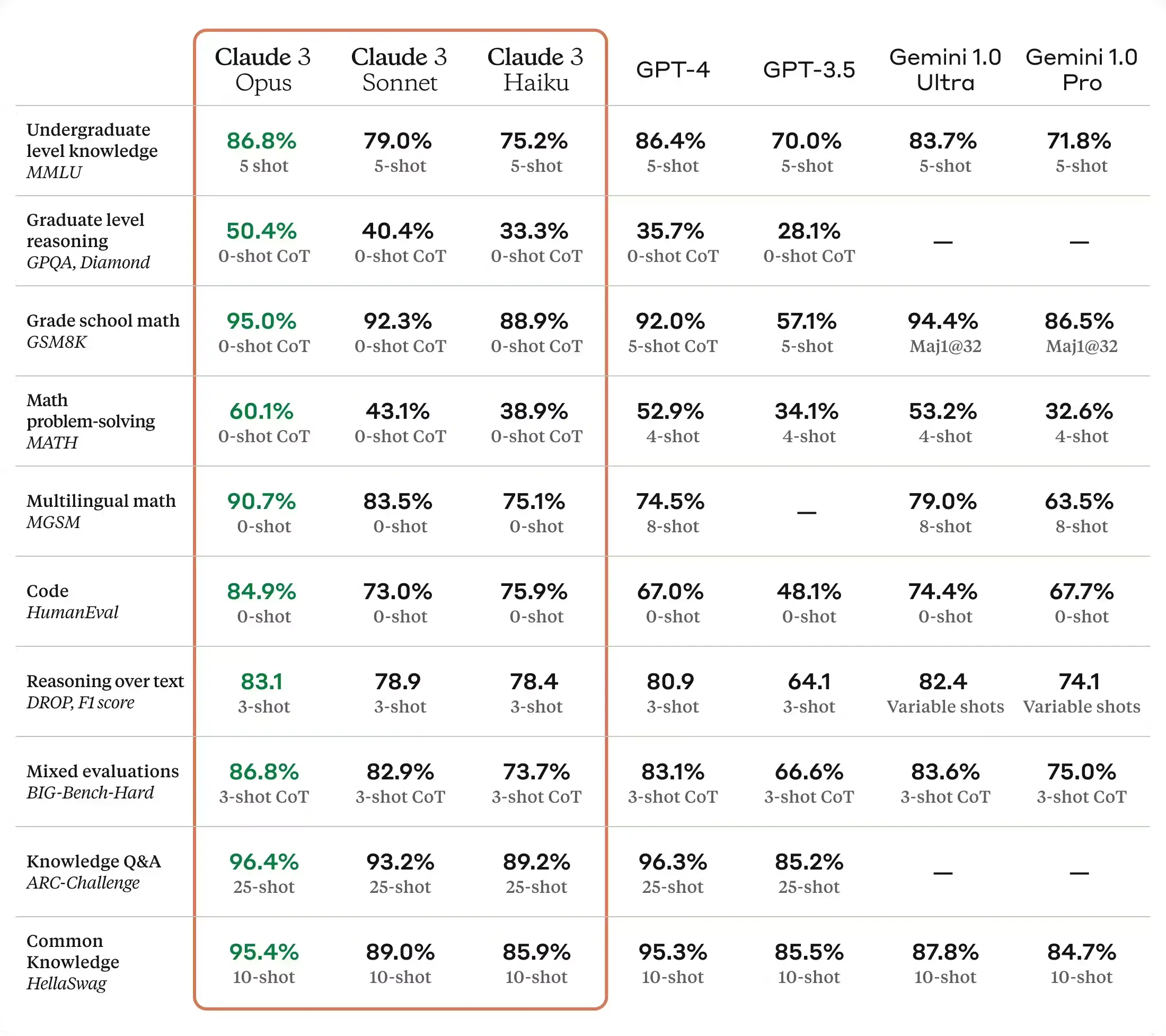

Anthropic announced the Claude 3 family, consisting of Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. According to the blog post, the top-tier model Opus outperforms GPT-4, OpenAI’s most powerful model, in benchmark tests.

Haiku is the cheapest model in the Claude 3 family and scores lowest of the three in the benchmarks. Opus is the most intelligent and most expensive of the three. All three models come with a 200K context window, which is the amount of text (measured in tokens) that the model can process at once.

In the benchmarks provided by Anthropic, Opus outperforms GPT-4 and Gemini Ultra in areas such as reasoning, coding, and mathematics. An API for Opus and Sonnet is already available.

Our thoughts

The results look good, but the models still need to prove themselves in practice. Based on the benchmarks, Anthropic appears to have launched a real competitor to OpenAI and Google. It’s important that there is competition among the leading companies in the AI field because competition brings innovation. That said, we favor open-source models, even though the most powerful models are currently closed-source.

AI matters to us all, so we should develop AI models responsibly. Should Big Tech control AI, or do we need more open-source initiatives?

More information

- Introducing the next generation of Claude - Anthropic Blog

Magic AI tool of the week

In today’s working world, organizing your work is more crucial than ever! There are many productivity tools out there. We have tested a lot of them, but one has really impressed us: Notion. It has increased our productivity from day one.

Notion combines the functions of a note-taking app, a project management tool, a document editor, and a calendar app. With Notion, you can manage your projects and your time in one place. Best of all, Notion also offers an AI assistant. We wrote an article about Notion AI. Feel free to check it out. 😀

In our opinion, everyone should try Notion. Have you heard about Notion but haven’t tried it yet? Now is the perfect time to do it!

Article of the week

In the fast-paced world of stock trading, analyzing time series data is crucial for making informed decisions. Tools like Pandas make this analysis much more straightforward. Imagine you need to shift all rows in a DataFrame, a common task in time series analysis. For example, some Machine Learning (ML) models need the time series shifted by a specific number of steps so that each row also contains earlier or later values. The Pandas library handles this with its shift function.

In our article, we look at examples to show how the Pandas shift function works. We’ll use Tesla stock’s time series data as our example dataset. Feel free to check it out!
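As a small taste, here is a minimal sketch of shifting a column with Pandas. The prices below are made-up numbers for illustration, not real Tesla data, and the column names `close`, `prev_close`, and `next_close` are our own choices.

```python
import pandas as pd

# Hypothetical closing prices (made-up values, not real Tesla data).
prices = pd.DataFrame(
    {"close": [100.0, 102.0, 101.0, 105.0]},
    index=pd.date_range("2024-01-01", periods=4, freq="D"),
)

# shift(1) moves every value down one row,
# so each row also holds the previous day's close.
prices["prev_close"] = prices["close"].shift(1)

# shift(-1) moves every value up one row,
# so each row also holds the next day's close (a typical ML target).
prices["next_close"] = prices["close"].shift(-1)

print(prices)
```

The rows that have no previous or next value are filled with NaN, so you usually drop or fill them before training a model.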

💡 Do you enjoy our content and want to read super-detailed articles about AI? If so, subscribe to our blog and get our popular data science cheat sheets for FREE.

Thanks for reading, and see you next time.

- Tinz Twins

P.S. Have a nice weekend! 😉😉